In the fast-evolving world of artificial intelligence, the pursuit of more intelligent, responsive, and context-aware systems has led to groundbreaking techniques. One such innovation making waves is Retrieval-Augmented Generation (RAG). This powerful approach to natural language processing combines the capabilities of traditional language models with real-time information retrieval, offering a compelling solution for tackling complex tasks like question answering, summarization, and even content creation.

But what exactly does RAG mean in AI, and how does it work under the hood? Let’s take a journey into the mechanics and magic of Retrieval-Augmented Generation.

What is Retrieval-Augmented Generation?

Retrieval-Augmented Generation (RAG) is a method that enhances the accuracy and factual grounding of language generation models by integrating a retrieval step during the generation process. Instead of relying solely on pre-trained knowledge, a RAG model can “look things up” in a database or knowledge corpus before forming a response.

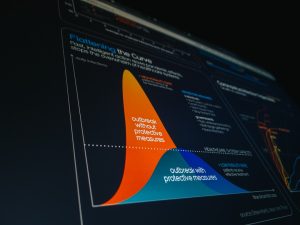

Standard language models such as GPT generate output based only on patterns they learned during training. While this works well within the boundaries of their training data, these models struggle with:

- Factual inaccuracies

- Knowledge that postdates their training data

- Obscure or domain-specific topics

RAG addresses these limitations by letting the model consult external data sources at inference time, so its answers can draw on current or specialized documents instead of only what it memorized during training.

How Retrieval-Augmented Generation Works

At a high level, RAG models operate in two primary stages: retrieval and generation. These components work as a pipeline to produce accurate and contextually rich responses. Here is a closer look at how each phase functions:

1. Retrieval Phase

When a user submits a query (e.g., “What is the capital of Switzerland?”), the retriever searches an indexed corpus, which could be structured documents, a knowledge base, or even scraped web pages. Most RAG retrievers represent the query and the stored passages as vectors (embeddings) and return the passages whose vectors are most similar to the query’s.

The retrieved documents are then passed along to the generation phase.
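To make the retrieval step concrete, here is a minimal Python sketch. It assumes a toy bag-of-words term-count vector in place of a learned embedding model, plus a small stopword list so filler words like “the” don’t dominate the scores; production retrievers use a neural encoder and a vector index instead. The functions `embed`, `cosine`, and `retrieve` and the three-sentence corpus are hypothetical, for illustration only.

```python
import math
import re
from collections import Counter

# Small stopword list (assumption) so filler words don't dominate similarity.
STOPWORDS = {"a", "an", "and", "in", "is", "of", "the", "what"}


def embed(text: str) -> Counter:
    """Toy stand-in for a neural embedding: a sparse term-count vector."""
    words = re.findall(r"[a-z]+", text.lower())
    return Counter(w for w in words if w not in STOPWORDS)


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors stored as Counters."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, corpus: list[str], k: int = 2) -> list[str]:
    """Return the k passages most similar to the query, best first."""
    q = embed(query)
    return sorted(corpus, key=lambda doc: cosine(q, embed(doc)), reverse=True)[:k]


corpus = [
    "Bern is the federal city and de facto capital of Switzerland.",
    "Zurich is the largest city in Switzerland.",
    "Paris is the capital of France.",
]
print(retrieve("What is the capital of Switzerland?", corpus, k=1))
```

With `k=1` the Bern passage comes out on top, because it is the only passage that shares both remaining query terms, “capital” and “switzerland.”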

2. Generation Phase

The retrieved documents provide the context that guides generation. A generator model, typically transformer-based (such as BART, T5, or GPT), takes the user query combined with the top-retrieved documents and produces a coherent, informative response.

Crucially, this means the model is not limited to what it “remembers” from training. It does not actually learn from the retrieved documents (its weights stay unchanged); it conditions on them for each query, which improves factual accuracy and relevance.
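With modern instruction-following LLMs, “the query combined with the retrieved documents” is often implemented by placing the passages directly in the model’s input. The sketch below shows that prompt-assembly style; the template wording and `build_prompt` are assumptions rather than a standard, and the final model call is left as a hypothetical comment because it depends on the generator you use. The original RAG model instead paired the query with each retrieved document separately and marginalized over them, so this is one common approach, not the only one.

```python
def build_prompt(query: str, passages: list[str]) -> str:
    """Combine retrieved passages and the user query into one generator input.

    The instruction wording is an assumption; real systems use many variants.
    """
    # Number each passage so the generator (or a reader) can refer to it.
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer the question using only the numbered context passages. "
        "If the answer is not in the context, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\n"
        "Answer:"
    )


passages = ["Bern is the federal city and de facto capital of Switzerland."]
prompt = build_prompt("What is the capital of Switzerland?", passages)
print(prompt)
# Next, the prompt would be sent to a generator model (hypothetical call):
# answer = generator.generate(prompt)
```

Telling the model to answer only from the context, and to say when the context is insufficient, is a common way to keep responses grounded in the retrieved text.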

Why Use RAG Over Traditional LLMs?

Even state-of-the-art large language models have limitations, especially when it comes to up-to-date or obscure information. Here’s why the RAG architecture is gaining popularity:

- Increased Accuracy: Grounding answers in retrieved sources reduces (though does not eliminate) hallucinations and factual errors.

- Up-to-Date Responses: The retrieval corpus can be updated at any time without retraining the model, so RAG systems don’t go stale the way static models do.

- Domain Adaptability: By pointing the retrieval mechanism to a domain-specific database, the model can effectively operate in specialized fields like law, medicine, or finance.

- Memory Efficiency: Instead of storing all knowledge in its parameters, a RAG system keeps much of it in an external index, so a comparatively small model can still handle knowledge-intensive tasks.
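The “Up-to-Date Responses” and “Memory Efficiency” points both come down to where knowledge is stored. In the minimal sketch below, a hypothetical in-memory `DocumentIndex` uses word-overlap (Jaccard) scoring as a stand-in for embedding search. Adding a newer document changes what the retriever surfaces, and no model retraining is involved. The policy texts are invented examples.

```python
import re
from typing import List, Optional, Set


def tokens(text: str) -> Set[str]:
    """Lowercased word set; a toy stand-in for a real embedding."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


class DocumentIndex:
    """Hypothetical in-memory index: knowledge lives here, not in model weights."""

    def __init__(self) -> None:
        self.docs: List[str] = []

    def add(self, doc: str) -> None:
        # Updating knowledge is just an insert; the generator is untouched.
        self.docs.append(doc)

    def search(self, query: str) -> Optional[str]:
        """Return the stored document with the highest Jaccard word overlap."""
        q = tokens(query)
        best, best_score = None, 0.0
        for doc in self.docs:
            d = tokens(doc)
            score = len(q & d) / len(q | d)
            if score > best_score:
                best, best_score = doc, score
        return best


index = DocumentIndex()
index.add("Policy 2023: employees may work remotely two days per week.")
question = "How many remote days per week are allowed in 2024?"
print(index.search(question))  # only the 2023 policy exists so far

index.add("Policy 2024: employees may work remotely three days per week.")
print(index.search(question))  # the newer document now scores higher
```

The second search returns the 2024 policy because it also matches “2024” in the question. In a real deployment the same effect comes from re-indexing updated documents.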

Applications of RAG in the Real World

The practical use cases of RAG are vast and growing. Below are a few notable areas where Retrieval-Augmented Generation is making an impact:

1. Enterprise Knowledge Assistants

Companies use RAG-powered assistants that can pull in documents from internal databases to answer HR, operations, and compliance-related questions accurately, even in rapidly changing environments.

2. Legal and Healthcare AI

A highly specialized field like law requires accurate references to past cases and legal statutes. Similarly, medical AI systems need up-to-date clinical guidelines and drug information. RAG allows these systems to stay current while generating information-rich summaries or suggestions.

3. Customer Support Chatbots

Instead of relying on predefined conversation trees, customer support bots powered by RAG can draw answers from support tickets, manuals, and FAQs, producing more accurate, context-aware responses.

4. Scientific and Academic Research

Scientists and students can leverage RAG-based tools to summarize literature, verify citations, or even brainstorm ideas based on the latest research papers available in digital libraries.

Challenges and Limitations of RAG

While RAG models offer significant advantages, they are not without challenges. Some limitations include:

- Latency: Retrieving and parsing external documents during inference can introduce delays compared to standard language models.

- Dependence on Corpus Quality: If the retrieval base contains inaccurate, biased, or outdated information, the generation output will reflect those flaws.

- Complexity in Implementation: Building a RAG system requires managing not just a language model but also a performant and scalable retrieval engine.

These challenges are actively being addressed through innovations in document indexing, better filtering, and hybrid models that combine multiple knowledge sources for redundancy and validation.
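One simple form of filtering is to discard low-scoring passages and cap how many reach the generator. This keeps irrelevant text out of the prompt and bounds prompt length, which also helps with latency. Below is a minimal sketch under stated assumptions: the `Hit` type, the `0.5` threshold, the cap of `3`, and the scores are all illustrative placeholders, since sensible values depend on the retriever’s scoring scale. Note that this filters for relevance only; it cannot detect a passage that is relevant but wrong.

```python
from typing import List, NamedTuple


class Hit(NamedTuple):
    text: str
    score: float  # retriever similarity; higher means more relevant


def filter_hits(hits: List[Hit], min_score: float = 0.5, max_hits: int = 3) -> List[Hit]:
    """Drop weak matches, then keep at most max_hits passages, best first."""
    kept = [h for h in hits if h.score >= min_score]
    kept.sort(key=lambda h: h.score, reverse=True)
    return kept[:max_hits]


# Invented retriever output for a question about refunds.
hits = [
    Hit("Items can be returned within 30 days.", 0.82),
    Hit("Our office dog is named Max.", 0.12),
    Hit("Refunds go to the original payment method.", 0.67),
]
for hit in filter_hits(hits):
    print(f"{hit.score:.2f}  {hit.text}")
```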

Popular Architectures and Tools Leveraging RAG

Several AI frameworks and libraries have adopted the RAG methodology to great effect. A few notable mentions include:

- Facebook AI’s Original RAG Architecture: This 2020 work, which introduced the name “retrieval-augmented generation,” was one of the first public implementations combining dense passage retrieval with generative models.

- Haystack by deepset: An open-source NLP framework with components for building production-ready RAG systems.

- LangChain and LlamaIndex: Frameworks for connecting large language models to external data sources and building retrieval-augmented applications.

These platforms continue to attract developers, data scientists, and enterprises looking to embed smarter NLP features into their products.

The Future of Retrieval-Augmented Generation

As data continues to grow in both size and complexity, the importance of on-demand, accurate retrieval becomes critical. RAG systems are well-positioned to be core components in the next generation of intelligent applications. Innovations like:

- Improved semantic search

- Hybrid retrieval (combining dense and sparse techniques)

- Federated retrieval for privacy-preserving AI

- Integration of multimodal data (text, image, video)

…will continue to shape how RAG evolves and integrates into our daily tools and services.
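Hybrid retrieval needs a way to merge the rankings from a dense (embedding) retriever and a sparse (keyword) retriever. One widely used, simple option is reciprocal rank fusion (RRF), sketched below. Each document scores the sum of `1 / (k + rank)` over the lists it appears in. The document IDs and both input rankings are made up, and `k = 60` is a conventional default rather than a tuned value.

```python
from collections import defaultdict
from typing import Dict, List


def reciprocal_rank_fusion(rankings: List[List[str]], k: int = 60) -> List[str]:
    """Merge several ranked lists of document IDs into one fused ranking."""
    scores: Dict[str, float] = defaultdict(float)
    for ranking in rankings:
        # Ranks start at 1; a document absent from a list gets nothing from it.
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=scores.__getitem__, reverse=True)


dense = ["doc_b", "doc_a", "doc_c"]   # e.g. ranked by embedding similarity
sparse = ["doc_a", "doc_d", "doc_b"]  # e.g. ranked by keyword matching such as BM25
print(reciprocal_rank_fusion([dense, sparse]))
```

Because RRF uses only ranks, it avoids having to reconcile dense and sparse scores that live on different scales. Here `doc_a` comes first because it ranks near the top of both lists.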

Conclusion

Retrieval-Augmented Generation represents a major step forward in the field of natural language processing and AI-driven communication. By merging the power of search with intelligent text generation, RAG systems can provide better accuracy, relevance, and domain flexibility than traditional language models alone.

Whether it’s in chatbots, educational tools, healthcare, or research, RAG makes AI not only smarter but also more accountable to real-world facts. As the demand for trustworthy AI grows, so too will the prominence of RAG in shaping how machines understand and respond to the world around them.