In the dynamic world of search engine optimization (SEO), staying visible in search results depends not only on the quality of your content but also on how often search engines crawl and re-index it. This is where indexing triggers become critical. Timely indexing ensures that new content, updated information, and structural changes to your site are reflected in search results. Among the most influential triggers are content freshness signals, the lastmod tag in XML sitemaps, and strategies involving public relations (PR) and internal link hubs. This article explores these mechanisms in depth and offers practical guidance for SEO professionals who want to improve crawl behavior and indexing efficiency.

Understanding Indexing Triggers

Indexing triggers are cues or signals that prompt search engine crawlers, most notably Googlebot, to revisit a page and potentially re-index it. Without adequate triggers, even significant site updates can go unnoticed for extended periods. Slow indexing can cause visibility problems, missed traffic opportunities, and a broader decline in organic performance.

Effective use of indexing triggers helps ensure that:

- New content is discovered and indexed quickly

- Updated information replaces outdated versions in the SERPs

- Pages maintain or improve their visibility over time

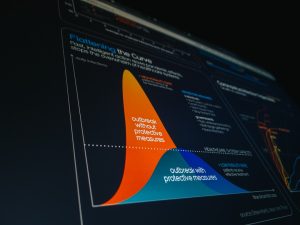

Freshness Signals: Keeping Content Relevant

Search engines aim to deliver timely, authoritative answers, so they tend to crawl active, frequently updated sites more often. Freshness signals are therefore among the most influential indexing triggers. They indicate that a site or a specific page has changed recently, which crawlers can treat as a cue to revisit it.

Here are key freshness signals that can influence indexing:

- Content Updates: Substantive edits, additions, or rewrites to existing content provide a strong signal.

- Comment Activity: User-generated content such as comments or reviews can suggest ongoing engagement.

- Publication Dates: Clearly displayed dates that match actual content changes signal recency.

- Feed Activity: Regularly updated RSS or news feeds can help crawlers discover new and changed URLs sooner.

However, not all updates carry equal weight. Trivial edits, such as fixing a comma or tweaking a meta description, are unlikely to prompt more frequent re-indexing unless they are accompanied by stronger update signals.
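To keep displayed dates aligned with real changes, a publisher can decide programmatically whether an edit is substantial enough to justify a new "updated" date. The Python sketch below shows one way to do that under stated assumptions: it compares whitespace-normalized body text with the standard library's `difflib.SequenceMatcher`, and the 0.95 similarity threshold and all function names are hypothetical choices, not values any search engine publishes. It then emits schema.org `Article` markup carrying `datePublished` and `dateModified`.

```python
import difflib
import json
from datetime import datetime, timezone

# Hypothetical threshold: an edit counts as substantive when the old and new
# text are less than 95% similar. Tune it for your content; no search engine
# publishes such a value.
SUBSTANTIVE_SIMILARITY = 0.95


def normalize(text: str) -> str:
    # Collapse runs of whitespace so reflowed paragraphs are not seen as edits.
    return " ".join(text.split())


def is_substantive_edit(old_text: str, new_text: str) -> bool:
    """Return True when the body text changed enough to justify a new 'updated' date."""
    ratio = difflib.SequenceMatcher(None, normalize(old_text), normalize(new_text)).ratio()
    return ratio < SUBSTANTIVE_SIMILARITY


def article_json_ld(headline: str, published: datetime, modified: datetime) -> str:
    """Build schema.org Article markup carrying datePublished and dateModified."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "datePublished": published.isoformat(),
        "dateModified": modified.isoformat(),
    }
    return json.dumps(data, indent=2)


# Usage: only advance dateModified when the edit is substantive.
published = datetime(2024, 1, 10, tzinfo=timezone.utc)
modified = published
old_body = "Our guide covers crawl budget basics."
new_body = "Our guide covers crawl budget basics, log analysis, and sitemap hygiene."
if is_substantive_edit(old_body, new_body):
    modified = datetime.now(timezone.utc)
print(article_json_ld("Crawl budget guide", published, modified))
```

In practice you would compare only the main content area rather than navigation or ads, and store the previous text (or a hash of it) between deployments.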

Lastmod in XML Sitemaps: A Direct Nudge to Crawlers

The lastmod tag in XML sitemaps is a more direct way to indicate when a URL was last modified. Used correctly, it tells crawlers which pages have changed since their last visit, expressed as a date or date-time in W3C Datetime format (for example, 2024-05-01). This helps search engines decide which URLs to re-crawl first, making indexing more efficient.
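A minimal sketch of generating a sitemap with lastmod values, using only Python's standard library. It assumes you already know when each URL last changed substantively (the hypothetical `pages` mapping below) and that those datetimes are timezone-aware. Calling `isoformat()` on an aware datetime produces a valid W3C Datetime string such as `2024-05-01T12:00:00+00:00`.

```python
import xml.etree.ElementTree as ET
from datetime import datetime, timezone
from typing import Dict

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: Dict[str, datetime]) -> str:
    """Return sitemap XML for a hypothetical mapping of URL -> last substantive change.

    Datetimes are assumed to be timezone-aware.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, modified in sorted(pages.items()):
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # ElementTree escapes & and <
        # W3C Datetime, normalized to UTC and whole seconds for consistency.
        stamp = modified.astimezone(timezone.utc).replace(microsecond=0)
        ET.SubElement(entry, "lastmod").text = stamp.isoformat()
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


print(build_sitemap({
    "https://example.com/guides/crawl-budget": datetime(2024, 5, 1, 12, 0, tzinfo=timezone.utc),
}))
```

Write the result to disk as UTF-8. The sitemaps.org protocol caps each file at 50,000 URLs and 50 MB uncompressed, so larger sites would split the output across several files listed in a sitemap index.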

Best practices for implementing lastmod include:

- Accuracy: Make sure timestamps reflect actual content changes, not backend events such as cache rebuilds or redeployments.

- Consistency: Change lastmod only when there is a corresponding user-visible change on the page, so the values stay credible.

- Automation: Use content management system (CMS) logic or build scripts to update lastmod automatically when genuine changes occur.

- Validation: Regularly check your sitemap in Google Search Console or similar tools so that errors do not undermine crawling.
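The accuracy and automation practices can be combined in a build step that fingerprints each page's visible text and advances lastmod only when that fingerprint changes. The sketch below assumes a hypothetical `PageRecord` store that is persisted between runs and that you can extract the user-visible text of each page; the names are illustrative and not part of any CMS API.

```python
import hashlib
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class PageRecord:
    """Hypothetical per-URL state a CMS or build script persists between runs."""
    content_hash: str
    lastmod: datetime


def fingerprint(visible_text: str) -> str:
    # Hash whitespace-normalized text so formatting-only changes are ignored.
    normalized = " ".join(visible_text.split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def refresh_lastmod(record: Optional[PageRecord], visible_text: str, now: datetime) -> PageRecord:
    """Advance lastmod only when the user-visible text actually changed."""
    new_hash = fingerprint(visible_text)
    if record is not None and record.content_hash == new_hash:
        return record  # Nothing a user can see changed: keep the old timestamp.
    return PageRecord(content_hash=new_hash, lastmod=now)


first = refresh_lastmod(None, "Pricing starts at $10.", datetime(2024, 3, 1, tzinfo=timezone.utc))
# A redeploy that only reflows whitespace keeps the March timestamp.
same = refresh_lastmod(first, "Pricing  starts at $10.", datetime(2024, 4, 1, tzinfo=timezone.utc))
# A real price change advances lastmod to May.
changed = refresh_lastmod(same, "Pricing starts at $12.", datetime(2024, 5, 1, tzinfo=timezone.utc))
print(first.lastmod.date(), same.lastmod.date(), changed.lastmod.date())
```

Because the hash ignores only whitespace, a template change that alters visible wording (a new footer line, for instance) would still count as a change; extracting just the main content before hashing avoids that.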

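Google has confirmed that it uses lastmod values when they are consistently and verifiably accurate, for example when they match the changes its crawlers actually observe. A track record of trustworthy timestamps therefore matters, and it makes lastmod especially valuable on large sites with thousands or even millions of URLs, where crawlers cannot revisit every page often.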

PR Campaigns and Internal Link Hubs

In addition to on-page and sitemap signals, off-page and architectural cues also drive indexing. Two powerful, often underused strategies here are public relations (PR) and internal linking structures, particularly hub pages.

1. Public Relations as an Indexing Trigger

High-authority backlinks generated by PR campaigns can quickly trigger crawl activity, especially when they come from frequently crawled domains such as news sites, tech blogs, or curated publications. A press release picked up by a major news aggregator can lead crawlers to discover and fetch the linked pages within hours.

Here’s how to leverage PR effectively:

- Create Link-worthy Announcements: Launches, statistics, partnerships, or research findings tend to perform well.

- Build Relationships: Work with journalists and editors to increase the likelihood that coverage links back to your site.

- Choose Strategic Link Targets: Point media links at newly created or recently updated hub pages, so crawlers arriving from those links reach your fresh content quickly.

2. Internal Link Hubs to Guide Crawl Paths

Internal link hubs, central pages that distribute authority to related content, serve as strategic entry points for crawlers. By funneling link equity to a curated set of child pages, hubs make it easier for crawlers to discover and prioritize URLs within your site architecture.

Advantages of using internal hubs include:

- Improved Crawl Distribution: Search bots are more likely to follow logical, clearly structured paths.

- Scalability: Reduces the number of links a crawler must follow to reach deep or newly generated pages.

- Contextual Relevance: Helps reinforce semantic relationships between content clusters.
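One way to make the scalability point concrete is to measure click depth: the fewest links a crawler must follow from the homepage to reach a page. The sketch below runs a breadth-first search over a hypothetical link graph and compares a site where a new article is reachable only through paginated archives with the same site after a hub is added. Click depth is only a proxy; real crawlers also discover URLs through sitemaps and external links.

```python
from collections import deque
from typing import Dict, List


def click_depths(links: Dict[str, List[str]], start: str = "/") -> Dict[str, int]:
    """Breadth-first search: fewest links from `start` to each reachable page."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit in BFS is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical site: a new article reachable only through paginated archives.
archive_only = {
    "/": ["/blog"],
    "/blog": ["/blog/page-2"],
    "/blog/page-2": ["/blog/page-3"],
    "/blog/page-3": ["/guides/new-article"],
}
# Same site with a guides hub linked from the homepage navigation.
with_hub = dict(archive_only)
with_hub["/"] = ["/blog", "/guides"]
with_hub["/guides"] = ["/guides/new-article"]

print(click_depths(archive_only)["/guides/new-article"])  # 4
print(click_depths(with_hub)["/guides/new-article"])      # 2
```

Running the same function over a real crawl export (for example, an internal-links report from a site crawler) would show which sections sit too deep and would benefit from a hub.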

For optimal performance, make sure that:

- Hub pages are linked from the main navigation or footer

- New or updated content is linked from a relevant, high-authority hub as soon as it goes live

- The hubs themselves are updated whenever their child pages change, so they genuinely reflect site activity

Combining Signals to Influence Crawl Efficiency

Used individually, the techniques discussed here (freshness signals, lastmod tags, PR campaigns, and internal hubs) can each nudge search engine crawlers. Their impact tends to be much greater, however, when they are integrated into a broader indexing plan.

Consider the following workflow for a new content asset:

- Publish the content with structured metadata, a clear publication date, and optimized on-page SEO elements.

- Link the content from a relevant hub page, and surface it within site navigation as appropriate.

- Update your XML sitemap with an accurate lastmod entry.

- Distribute the URL via a press release targeted to high-authority platforms.

- Promote through social channels and RSS feeds to generate engagement signals.

This multi-channel approach improves your chances of timely indexing by signaling change through several complementary vectors: on-page, technical, off-site, and structural.
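For the RSS step in this workflow, a feed can be generated from the same publication data used elsewhere. The sketch below builds a minimal RSS 2.0 document with Python's standard library; the channel details, the `FeedItem` type, and the `build_rss` name are hypothetical, and a production feed would usually add a per-item description. `email.utils.format_datetime` produces the RFC 822-style dates that RSS 2.0 uses for `pubDate`.

```python
from __future__ import annotations

import xml.etree.ElementTree as ET
from dataclasses import dataclass
from datetime import datetime, timezone
from email.utils import format_datetime


@dataclass
class FeedItem:
    title: str
    link: str
    published: datetime  # assumed timezone-aware


def build_rss(title: str, link: str, description: str, items: list[FeedItem]) -> str:
    """Return a minimal RSS 2.0 document, newest items first."""
    rss = ET.Element("rss", version="2.0")
    channel = ET.SubElement(rss, "channel")
    ET.SubElement(channel, "title").text = title
    ET.SubElement(channel, "link").text = link
    ET.SubElement(channel, "description").text = description
    for item in sorted(items, key=lambda i: i.published, reverse=True):
        node = ET.SubElement(channel, "item")
        ET.SubElement(node, "title").text = item.title
        ET.SubElement(node, "link").text = item.link
        ET.SubElement(node, "guid").text = item.link  # permalink doubles as the unique ID
        # RFC 822-style date in GMT, e.g. "Wed, 01 May 2024 12:00:00 GMT".
        utc = item.published.astimezone(timezone.utc)
        ET.SubElement(node, "pubDate").text = format_datetime(utc, usegmt=True)
    return ET.tostring(rss, encoding="unicode")


feed = build_rss(
    "Example Guides",
    "https://example.com/guides",
    "New and updated guides",
    [FeedItem("Crawl budget guide", "https://example.com/guides/crawl-budget",
              datetime(2024, 5, 1, 12, 0, tzinfo=timezone.utc))],
)
print(feed)
```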

Monitoring and Adjusting Crawl Behavior

No strategy is complete without proper monitoring. Tools such as Google Search Console and Bing Webmaster Tools, along with third-party crawlers like Screaming Frog or Sitebulb, can help you track indexing patterns and crawl rates. Look for anomalies such as:

- URLs dropped from the index

- Long time intervals between crawls on key content

- Sitemap parsing errors or misreported lastmod dates
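Checks for the last two anomalies can be scripted against the sitemap file itself. This sketch parses a sitemap with `xml.etree.ElementTree`, reports XML that fails to parse, and flags lastmod values that are malformed or dated in the future. It is a simplified check that accepts only the date-only and full date-time forms of W3C Datetime, so the rarely used year or year-month forms would be flagged for manual review; sitemap index files are not handled. The function names are hypothetical.

```python
import re
import xml.etree.ElementTree as ET
from datetime import date, datetime, timezone
from typing import List, Optional

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}
DATE_ONLY = re.compile(r"\d{4}-\d{2}-\d{2}")


def parse_lastmod(value: str) -> Optional[datetime]:
    """Parse the two common W3C Datetime forms; return None if malformed."""
    value = value.strip()
    try:
        if DATE_ONLY.fullmatch(value):
            d = date.fromisoformat(value)
            return datetime(d.year, d.month, d.day, tzinfo=timezone.utc)
        if "T" in value:
            parsed = datetime.fromisoformat(value.replace("Z", "+00:00"))
            # W3C Datetime requires a time zone designator when a time is present.
            return parsed if parsed.tzinfo is not None else None
    except ValueError:
        pass
    return None


def audit_sitemap(xml_bytes: bytes, now: Optional[datetime] = None) -> List[str]:
    """Return human-readable problems found in a <urlset> sitemap."""
    now = now or datetime.now(timezone.utc)
    try:
        root = ET.fromstring(xml_bytes)
    except ET.ParseError as exc:
        return [f"XML parse error: {exc}"]
    if root.tag != "{%s}urlset" % NS["sm"]:
        return ["root element is not a sitemaps.org <urlset>"]
    problems = []
    for url in root.findall("sm:url", NS):
        loc = url.findtext("sm:loc", default="", namespaces=NS).strip()
        raw = url.findtext("sm:lastmod", default=None, namespaces=NS)
        if not loc:
            problems.append("entry without <loc>")
            continue
        if raw is None:
            continue  # lastmod is optional in the sitemap protocol
        parsed = parse_lastmod(raw)
        if parsed is None:
            problems.append(f"{loc}: malformed lastmod {raw!r}")
        elif parsed > now:
            problems.append(f"{loc}: lastmod {raw.strip()} is in the future")
    return problems


SAMPLE = b"""<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/a</loc><lastmod>2024-05-01</lastmod></url>
  <url><loc>https://example.com/b</loc><lastmod>05/01/2024</lastmod></url>
  <url><loc>https://example.com/c</loc><lastmod>2099-01-01T00:00:00Z</lastmod></url>
  <url><loc>https://example.com/d</loc></url>
</urlset>"""

for problem in audit_sitemap(SAMPLE, now=datetime(2024, 6, 1, tzinfo=timezone.utc)):
    print(problem)
```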

Regular auditing lets you refine your strategy and adapt to algorithm changes. It also shows whether your indexing triggers are having the desired effect.
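Server access logs give the most direct view of crawl intervals. The sketch below assumes logs in the common Apache/Nginx combined format, plus a hypothetical list of key paths and a hypothetical 14-day staleness threshold. It identifies Googlebot by user-agent string only; because that string can be spoofed, Google recommends confirming crawler IP addresses with a reverse DNS lookup before trusting the results.

```python
import re
from datetime import datetime, timedelta, timezone
from typing import Dict, Iterable, List, Optional

# Apache/Nginx "combined" log format (an assumption about your server setup).
LOG_RE = re.compile(
    r'^\S+ \S+ \S+ \[(?P<time>[^\]]+)\] "\S+ (?P<path>\S+) [^"]*" '
    r'\d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def last_googlebot_hits(lines: Iterable[str]) -> Dict[str, datetime]:
    """Map each requested path to the latest hit whose user agent claims Googlebot."""
    latest: Dict[str, datetime] = {}
    for line in lines:
        match = LOG_RE.match(line)
        if not match or "Googlebot" not in match.group("agent"):
            continue
        # Unverified: user agents can be spoofed; confirm IPs via reverse DNS.
        seen = datetime.strptime(match.group("time"), "%d/%b/%Y:%H:%M:%S %z")
        path = match.group("path")
        if path not in latest or seen > latest[path]:
            latest[path] = seen
    return latest


def stale_key_pages(
    latest: Dict[str, datetime],
    key_paths: List[str],
    now: datetime,
    max_age: timedelta = timedelta(days=14),  # hypothetical threshold
) -> Dict[str, Optional[datetime]]:
    """Return key paths not crawled within max_age (None means never seen in the logs)."""
    return {
        path: latest.get(path)
        for path in key_paths
        if latest.get(path) is None or now - latest[path] > max_age
    }


BOT_UA = '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"'
LOG_LINES = [
    '66.249.66.1 - - [01/May/2024:12:00:00 +0000] "GET /guides/crawl-budget HTTP/1.1" 200 5123 "-" ' + BOT_UA,
    '66.249.66.1 - - [20/May/2024:08:30:00 +0000] "GET /pricing HTTP/1.1" 200 2048 "-" ' + BOT_UA,
    '203.0.113.5 - - [21/May/2024:09:00:00 +0000] "GET /guides/crawl-budget HTTP/1.1" 200 5123 "-" "Mozilla/5.0"',
]

latest = last_googlebot_hits(LOG_LINES)
print(stale_key_pages(latest, ["/guides/crawl-budget", "/pricing", "/guides"],
                      now=datetime(2024, 5, 25, tzinfo=timezone.utc)))
```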

Conclusion

Effective SEO is not just about creating great content but also about ensuring that content is discoverable, indexable, and served to the right audience at the right time. By leveraging indexing triggers such as freshness signals, the lastmod tag in sitemaps, and strategic use of PR and internal link hubs, webmasters can foster timely and consistent indexing behavior.

In today’s competitive digital landscape, knowing how to influence crawler behavior is not just a technical skill—it’s a strategic differentiator. The most successful SEO professionals master the art of layer-cake signaling: visible freshness, structured directives via sitemaps, content amplification through external channels, and intelligent site architecture through hubs. Each reinforces the others and creates a robust ecosystem for continuous discovery and visibility.

Stay aware, stay agile, and, most importantly, keep feeding the signals. In SEO, staying still is the fastest way to get left behind.